Chapter 1 Introduction

In recent years, there has been an exponential growth in the amount of data available for computational analysis, including scientific data as well as common data types such as text, images, and audio. This abundance of data has empowered various fields, including physics, chemistry, computational social sciences, and biology, to make significant progress using machine learning techniques, primarily deep neural networks. As deep neural networks can effectively summarize and extract patterns from large data sets, they are suitable for many complex tasks. Initially, deep neural networks have been developed to learn from data supported on regular (Euclidean) domains, such as grids in images, sequences of text and time-series. These models, including convolutional neural networks (CNNs) (LeCun et al. 1998; Krizhevsky, Sutskever, and Hinton 2012; Simonyan and Zisserman 2014), recurrent neural networks (RNNs) (Bahdanau, Cho, and Bengio 2014; Sutskever, Vinyals, and Le 2014) and transformers (Vaswani et al. 2017), have proven highly effective in processing such Euclidean data (Goodfellow et al. 2016), resulting in unprecedented performance in various applications, most recently in chat-bots (e.g., ChatGPT (Adesso 2023)) and text-controlled image synthesis (Rombach et al. 2022).

However, scientific data in various fields are often structured differently and are not supported on regular Euclidean domains. As a result, adapting deep neural networks to process this type of data has been a challenge. Against this backdrop, geometric deep learning (GDL) (Zonghan Wu et al. 2020; Zhou et al. 2020; Bronstein et al. 2021) has emerged as an extension of deep learning models to non-Euclidean domains. To achieve this, GDL restricts the performed computations via principles of geometric regularity, such as symmetries, invariances, and equivariances. The GDL perspective allows for appropriate inductive biases to be imposed when working with arbitrary data domains, including sets (Qi et al. 2017; Rempe et al. 2020; H. Deng, Birdal, and Ilic 2018; Y. Zhao et al. 2022; Jiahui Huang et al. 2022), grids (Boscaini et al. 2015, 2016; Masci et al. 2015; Kokkinos et al. 2012; Shuman, Ricaud, and Vandergheynst 2016; Zhirong Wu et al. 2015; Monti et al. 2017), manifolds (Boscaini et al. 2015, 2016; Masci et al. 2015; Kokkinos et al. 2012; Shuman, Ricaud, and Vandergheynst 2016; Zhirong Wu et al. 2015; Monti et al. 2017), and graphs (Scarselli et al. 2008; Gallicchio and Micheli 2010; Zhou et al. 2020; Zonghan Wu et al. 2020; Boscaini et al. 2016; Monti et al. 2017; Bronstein et al. 2017; Kipf and Welling 2016). Graphs, in particular, have garnered interest due to their applicability in numerous scientific studies and their ability to generalize conventional grids. Accordingly, the development of graph neural networks (GNNs) (Bronstein et al. 2017; Kipf and Welling 2016) has remarkably enhanced our ability to model and analyze several types of data in which graphs naturally appear.

Despite the success of GDL and GNNs, seeing graphs through a purely geometric viewpoint yields a solely local abstraction and falls short of capturing non-local properties and dependencies in data. Topological data, including interactions of edges (in graphs), triangles (in meshes) or cliques, arise naturally in an array of novel applications in complex physical systems (Battiston et al. 2021; Lambiotte, Rosvall, and Scholtes 2019), traffic forecasting (W. Jiang and Luo 2022), social influence (Zhu et al. 2018), protein interaction (Murgas, Saucan, and Sandhu 2022), molecular design (Schiff et al. 2020), visual enhancement (Efthymiou et al. 2021), recommendation systems (La Gatta et al. 2022), and epidemiology (S. Deng et al. 2020). To natively and effectively model such data, we are bound to go beyond graphs and consider qualitative spatial properties remaining unchanged under some geometric transformations. In other words, we need to consider the topology of data (G. Carlsson 2009) to formulate neural network architectures capable of extracting semantic meaning from complex data.

One approach to extract more global information from data is to go beyond graph-based abstractions and consider extensions of graphs, such as simplicial complexes, cell complexes, and hypergraphs, generalizing most data domains encountered in scientific computations (Bick et al. 2021; Battiston et al. 2020; Benson, Gleich, and Higham 2021; Torres et al. 2021). The development of machine learning models to learn from data supported on these topological domains (Feng et al. 2019; Bunch et al. 2020; T. Mitchell Roddenberry, Schaub, and Hajij 2022; Schaub et al. 2020, 2021; Billings et al. 2019; Hacker 2020; Hajij, Istvan, and Zamzmi 2020; Ebli, Defferrard, and Spreemann 2020; T. Mitchell Roddenberry, Glaze, and Segarra 2021; L. Giusti, Battiloro, Testa, et al. 2022; Yang and Isufi 2023) is a rapidly growing new frontier, to which we refer hereafter as topological deep learning (TDL). TDL intertwines several research areas, including topological data analysis (TDA) (Edelsbrunner and Harer 2010; G. Carlsson 2009; Dey and Wang 2022a; Love et al. 2023a; Ghrist 2014), topological signal processing (Schaub and Segarra 2018; Yang et al. 2021; Schaub et al. 2022; T. Mitchell Roddenberry, Schaub, and Hajij 2022; Barbarossa and Sardellitti 2020a; Robinson 2014; Sardellitti and Barbarossa 2022), network science [Skardal et al. (2021); Lambiotte, Rosvall, and Scholtes (2019); Barabási (2013); Battiston et al. (2020); Bick et al. (2021); Bianconi (2021); Benson, Gleich, and Leskovec (2016); De Domenico et al. (2016); Bao et al. (2022); Oballe et al. (2021)}, and geometric deep learning (S.-X. Zhang et al. 2020; Cao et al. 2020; Fey and Lenssen 2019; Loukas 2019; P. W. Battaglia et al. 2018; Morris et al. 2019; P. Battaglia et al. 2016).

Despite the growing interest in TDL, a broader synthesis of the foundational principles of these ideas has not been established so far. We argue that this is a deficiency that inhibits progress in TDL, as it makes it challenging to draw connections between different concepts, impedes comparisons, and makes it difficult for researchers of other fields to find an entry point to TDL. Hence, in this paper, we aim to provide a foundational overview over the principles of TDL, serving not only as a unifying framework for the many exciting ideas that have emerged in the literature over recent years, but also as a conceptual starting point to promote the exploration of new ideas. Ultimately, we hope that this work will contribute to the accelerated growth in TDL, which we believe would be a key enabler of transferring deep learning successes to an enlarged range of application scenarios.

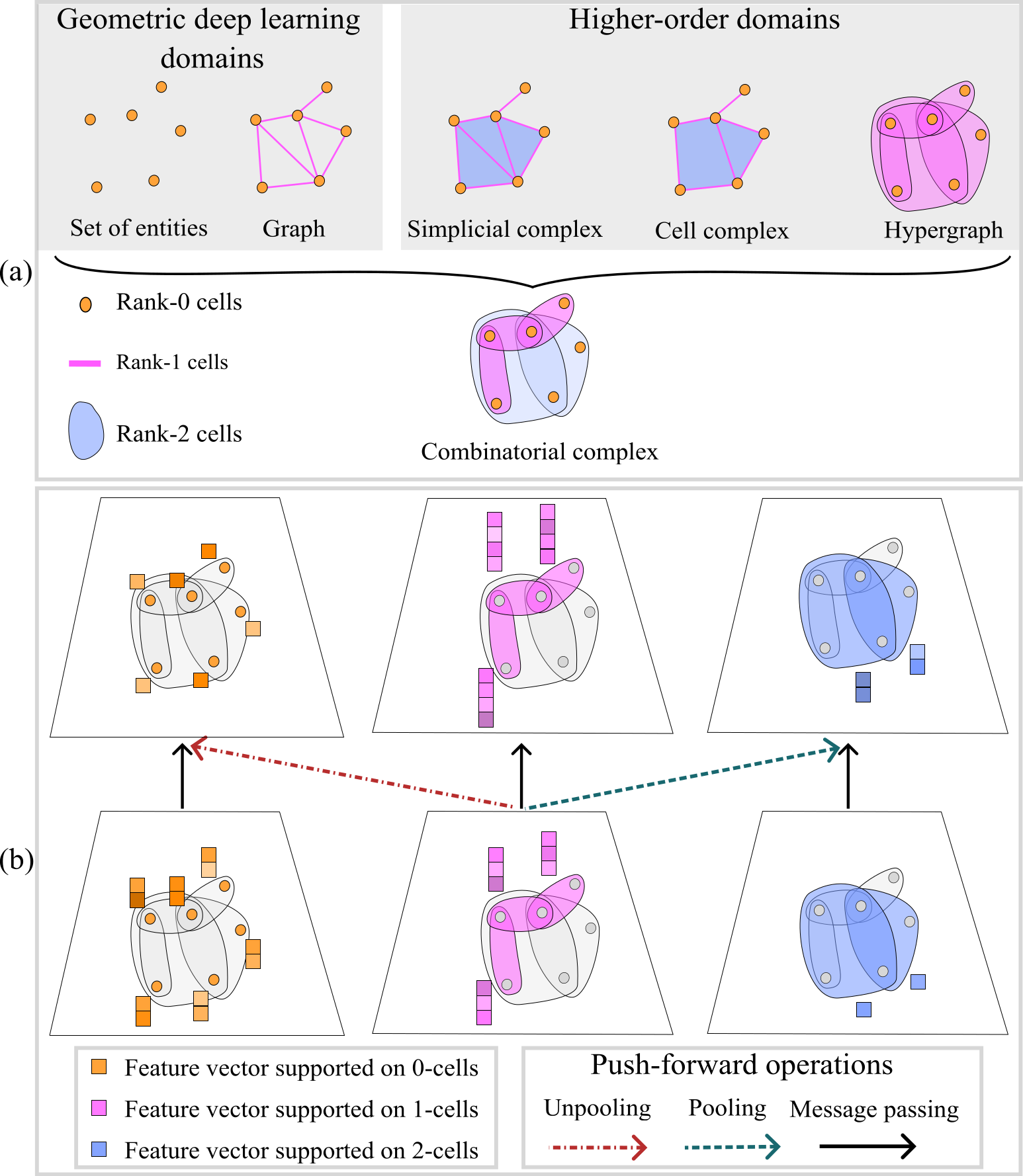

By drawing inspiration from traditional topological notions in algebraic topology (Ghrist 2014; Hatcher 2005) and recent advancements in higher-order networks (Battiston et al. 2020, 2021; Torres et al. 2021; Bick et al. 2021), we first introduce combinatorial complexes (CCs) as the major building blocks of our TDL framework. CCs constitute a novel topological domain that unifies graphs, simplicial and cell complexes, and hypergraphs as special cases, as illustrated in Figure 1.11. Similar to hypergraphs, CCs can encode arbitrary set-like relations between collections of abstract entities. Moreover, CCs permit the construction of hierarchical higher-order relations, analogous to those found in simplicial and cell complexes. Hence, CCs generalize and combine the desirable traits of hypergraphs and cell complexes.

FIGURE 1.1: A graphical abstract that visualizes our main contributions. (a): Different mathematical structures can be used to represent relations between abstract entities. Sets have entities with no connections, graphs encode binary relations between vertices, simplicial and cell complexes model hierarchical higher-order relations, and hypergraphs accommodate arbitrary set-type relations with no hierarchy. We introduce combinatorial complexes (CCs), which generalize graphs, simplicial and cell complexes, and hypergraphs. CCs are equipped with set-type relations as well as with a hierarchy of these relations. (b): By utilizing the hierarchical and topological structure of CCs, we introduce the push-forward operation, a fundamental building block for higher-order message-passing protocols and for (un)pooling operations on CCs. Our push-forward operations on CCs enable us to construct combinatorial complex neural networks (CCNNs), which provide a general conceptual framework for topological deep learning on higher-order domains.

In addition, we introduce the necessary operators to construct deep neural networks for learning features and abstract summaries of input anchored on CCs. Such operators provide convolutions, attention mechanisms, message-passing schemes as well as the means for incorporating invariances, equivariances, or other geometric regularities. Specifically, our novel push-forward operation allows pushing data across different dimensions, thus forming an elementary building block upon which higher-order message-passing protocols and (un)pooling operations are defined on CCs. The resulting learning machines, which we call combinatorial complex neural networks (CCNNs), are capable of learning abstract higher-order data structures, as clearly demonstrated in our experimental evaluation.

We envisage our contributions to be a platform encouraging researchers and practitioners to extend our CCNNs, and invite the community to build upon our work to expand TDL on higher-order domains. Our contributions, which are also visually summarized in Figure 1.1, are the following:

- First, we introduce CCs as domains for TDL. We characterize CCs and their properties, and explain how they generalize major existing domains, such as graphs, hypergraphs, simplicial and cell complexes. CCs can thus serve as a unifying starting point that enables the learning of expressive representations of topological data.

- Second, using CCs as domains, we construct CCNNs, an abstract class of higher-order message-passing neural networks that provide a unifying blueprint for TDL models based on hypergraphs and cell complexes.

- Based upon a push-forward operator defined on CCs, we introduce convolution, attention, pooling and unpooling operators for CCNNs.

- We formalize and investigate permutation and orientation equivariance of CCNNs, paving the way to future work on geometrization of CCNNs.

- We show how CCNNs can be intuitively constructed via graphical notation.

- Third, we evaluate our ideas in practical scenarios.

- We release the source code of our framework as three supporting Python libraries: TopoNetX, TopoEmbedX and TopoModelX.

- We show that CCNNs attain competing predictive performance against state-of-the-art task-specific neural networks in various applications, including shape analysis and graph learning.

- We establish connections between our work and classical constructions in TDA, such as the mapper (Singh et al. 2007). Particularly, we realize the mapper construction in terms of our TDL framework and demonstrate how it can be utilized in higher-order (un)pooling on CCs.

- We demonstrate the reducibility of any CC to a special graph called the Hasse graph. This enables the characterization of certain aspects of CCNNs in terms of graph-based models, allowing us to reduce higher-order representation learning to graph representation learning (using an enlarged computational graph).

Glossary. Prior to delving into more details, we present the elementary terminologies of already established concepts used throughout the paper. Some of these terms are revisited formally in Chapter 3. Appendix A provides notation and terminology glossaries for novel ideas put forward by the paper.

Cell complex: a topological space obtained as a disjoint union of topological disks (cells), with each of these cells being homeomorphic to the interior of a Euclidean ball. These cells are attached together via attaching maps in a locally reasonable manner.

Domain: the underlying space where data is typically supported.

Entity or vertex: an abstract point; it can be thought as an element of a set.

Graph or network: a set of entities (vertices) together with a set of edges representing binary relations between the vertices.

Hierarchical structure on a topological domain: an integer-valued function that assigns a positive integer (rank) to every relation in the domain such that higher-order relations are assigned higher rank values. For instance, a simplicial complex admits a hierarchical structure induced by the cardinality of its simplices.

Higher-order network/topological domain: generalization of a graph that captures (binary and) higher-order relations between entities. Simplicial/cell complexes and hypergraphs are examples of higher-order networks.

Hypergraph: a set of entities (vertices) and a set of hyperedges representing binary or higher-order relations between the vertices.

Message passing: a computational framework that involves passing data, `messages’, among neighbor entities in a domain to update the representation of each entity based on the messages received from its neighbors.

Relation or cell: a subset of a set of entities (vertices). A relation is called binary if its cardinality is equal to two. A relation is called higher-order if its cardinality is greater than two.

Set-type relation: a higher-order network is said to have set-type relations if the existence of a relation is not implied by another relation in the network; e.g., hypergraphs admit set-type relations.

Simplex: a generalization of a triangle or tetrahedron to arbitrary dimensions; e.g., a simplex of dimension zero, one, two, or three is a point, line segment, triangle, or tetrahedron, respectively.

Simplicial complex: a collection of simplices such that every face of each simplex in the collection is also in the collection, and the intersection of any two simplices in the collection is either empty or a face of both simplices.

Topological data: feature vectors supported on relations in a topological domain.

Topological deep learning: the study of topological domains using deep learning techniques, and the use of topological domains to represent data in deep learning models.

Topological neural network: a deep learning model that processes data supported on a topological domain.

References

All figures in this paper should be displayed in color, as different colors communicate different pieces of information.↩︎